FREE Local LLMs on Apple Silicon | FAST!

Step-by-step setup guide for a fully local LLM with a ChatGPT-like UI, covering the backend, the frontend, and a Docker option.

🎥 Related Videos 🎥

* 🌗 RAM torture test on Mac – https://youtu.be/l3zIwPgan7M

* 🛠️ Host the PERFECT Prompt – https://youtu.be/LTL3MSFVpm0

* 🛠️ Set up Conda on Mac – https://youtu.be/2Acht_5_HTo

* 🛠️ Set up Node on Mac – https://youtu.be/AEuI0PBvgfM

* 🤖 INSANE Machine Learning on Neural Engine – https://youtu.be/Y2FOUg_jo7k

* 💰 This is what spending more on a MacBook Pro gets you – https://youtu.be/iLHrYuQjKPU

* 🛠️ Developer productivity Playlist – https://www.youtube.com/playlist?list=PLPwbI_iIX3aQCRdFGM7j4TY_7STfv2aXX

🔗 AI for Coding Playlist: 📚 – https://www.youtube.com/playlist?list=PLPwbI_iIX3aSlUmRtYPfbQHt4n0YaX0qw

Repo

https://github.com/open-webui/open-webui

Docs

https://docs.openwebui.com/

Docker Single Command

docker run -d --network=host -v open-webui:/app/backend/data -e OLLAMA_BASE_URL=http://127.0.0.1:11434 --name open-webui --restart always ghcr.io/open-webui/open-webui:main
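
The Docker command above points the UI at Ollama through OLLAMA_BASE_URL, so it can help to confirm that Ollama is answering on that address before starting the container. The snippet below is a minimal sketch of such a check, not part of the video or the Open WebUI docs. It assumes Ollama is running with its default port and queries its /api/tags endpoint, which lists locally installed models. The helper names tags_url and list_ollama_models are hypothetical, chosen only for this illustration.

```python
import json
import urllib.error
import urllib.request


def tags_url(base_url: str) -> str:
    """Build the URL of Ollama's model-listing endpoint from a base URL."""
    return base_url.rstrip("/") + "/api/tags"


def list_ollama_models(base_url: str = "http://127.0.0.1:11434", timeout: float = 3.0):
    """Return the names of locally installed models, or None if Ollama is unreachable.

    base_url is assumed to match the OLLAMA_BASE_URL passed to the container.
    """
    try:
        with urllib.request.urlopen(tags_url(base_url), timeout=timeout) as resp:
            payload = json.load(resp)
    except (urllib.error.URLError, OSError, ValueError):
        # Connection refused, timeout, or a non-JSON reply: treat as "not reachable".
        return None
    # The response is expected to be a JSON object with a "models" list.
    return [model.get("name", "") for model in payload.get("models", [])]
```

Calling list_ollama_models() returns a list of model names when Ollama is up, and None when nothing answers on that address, in which case the web UI would likely not find any models either.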

📱 ALEX ON X: https://twitter.com/digitalix

#machinelearning #llm #softwaredevelopment

by Alex Ziskind

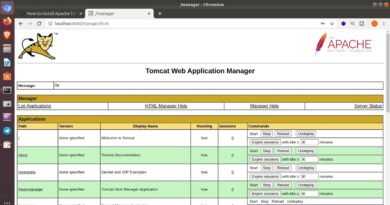

linux web server

I was able to, by tracking down your Conda video, get this running.

I have some web dev and Linux experience, so it wasn’t a huge chore but certainly not easy going in relatively blind.

Great tutorial though. Much thanks.

What do you think of the Snapdragon laptops? Are they rivals to Apple Silicon?

Let's do some image generation, please. It would be super helpful.

Would be happy to see a tutorial for AUTOMATIC1111 ❤

Yes Alex, it would help us even more if we could learn from you how to add an image generator as well. Thank you for your time and collaboration. Your channel is a must-have subscription nowadays.

Cool! Now I'm an AI developer. 🙃

I have 8GB of RAM. Can I run this? I know this uses the GPU, but do I still need 16 or 32GB of RAM?

My Mac with 8 gigs of RAM just died processing it 🥲

Great video! So are you saying that we can get ChatGPT-like quality, just faster, more private, and for free, by running local LLMs on our personal machines? Like, do you feel that this replaces ChatGPT?

Yes I’m interested in an image generation video. I’m running llama3 in Bash, haven’t had time to set up a front end yet. Cool video.

instant sub, great content thank you!

Alex, I love this video very much. Thank you!

Do you know if any LLM would run on a base model M1 MacBook Air (8GB memory)?

Which model is good for programming in JavaScript on an Apple Silicon Mac with 16GB?

Yes yes please make a video generation video!!!

Thanks! Is a MacBook Air enough for that?

Nice. Image generation and integrating the new ChatGPT into this would be great.

When will there be a video on running an LLM on an iPhone or iPad? Like using LLMFarm.

So cool, and it's free (if we don't count the 4 grand spent on the machine). I'd love to see image generation.

Thanks for the video. There's a one-line install for the same thing on the Open WebUI GitHub.

thanks alex

Would an MBP M1 Pro with 16GB of RAM be enough to run this?

If you're trying Docker, make sure it is Docker Desktop version 4.29+, as the host network driver (for Mac) was introduced there as a beta feature.

Great video!! And yes, please add a video explaining how to add the image generator.

Great video, Alex. Is there any way to have an LLM execute local shell scripts to perform tasks?

Image generation video please