ThursdAI – Jan 31, 2024- Code LLama, Bard is now 2nd best LLM?!, new LLaVa is great at OCR, Herme…

TL;DR of all topics covered + Show notes

* Open Source LLMs

* Meta releases Code-LLama 70B – 67.8% HumanEval (Announcement (https://twitter.com/AIatMeta/status/1752013879532782075) , HF instruct version (https://huggingface.co/codellama/CodeLlama-70b-Instruct-hf) , HuggingChat, Perplexity)

* Together added function calling + JSON mode to Mixtral, Mistral and CodeLLama

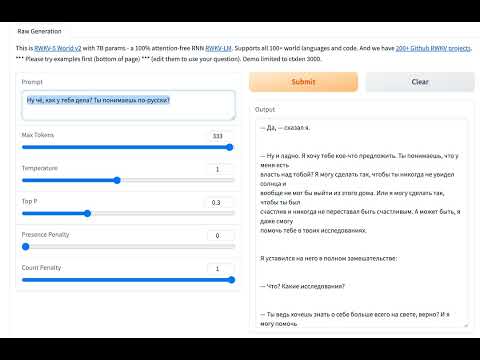

* RWKV (non-transformer-based) Eagle-7B – (Announcement (https://x.com/RWKV_AI/status/1751797147492888651?s=20) , Demo (https://huggingface.co/spaces/BlinkDL/RWKV-Gradio-2) , Yam’s Thread (https://twitter.com/Yampeleg/status/1751850391480721693) )

* Someone leaks Miqu, Mistral confirms (https://x.com/altryne/status/1752748034180481533?s=20) it’s an old version of their model

* Olmo from Allen Institute – fully open source 7B model (Data, Weights, Checkpoints, Training code) – Announcement (https://allenai.org/olmo)

* Datasets & Embeddings

* Teknium open sources Hermes dataset (Announcement (https://twitter.com/Teknium1/status/1752799124775374928) , Dataset (https://huggingface.co/datasets/teknium/OpenHermes-2.5) , Lilac (https://lilacai-lilac.hf.space/datasets#lilac/OpenHermes-2.5) )

* Lilac announces Garden – LLM powered clustering cloud for datasets (Announcement (https://twitter.com/lilac_ai/status/1752361374640902402) )

* BAAI releases BGE-M3 – Multi-lingual (100+ languages), 8K context, multi functional embeddings (Announcement (https://twitter.com/BAAIBeijing/status/1752182391983280248) , Github (https://github.com/FlagOpen/FlagEmbedding) , technical report (https://github.com/FlagOpen/FlagEmbedding/blob/master/FlagEmbedding/BGE_M3/BGE_M3.pdf) )

* Nomic AI releases Nomic Embed – fully open source embeddings (Announcement (https://twitter.com/nomic_ai/status/1753082063048040829) , Tech Report (https://static.nomic.ai/reports/2024_Nomic_Embed_Text_Technical_Report.pdf) )

* Big CO LLMs + APIs

* Bard with Gemini Pro becomes the 2nd best LLM in the world per LMSYS, beating 2 out of 3 GPT-4 versions (Thread (https://twitter.com/lmsysorg/status/1750921228012122526) )

* OpenAI launches GPT mention feature, it’s powerful! (Thread (https://x.com/altryne/status/1752755823212667084?s=20) )

* Vision & Video

* 🔥 LLaVa 1.6 – 34B achieves SOTA among open source vision models (X (https://twitter.com/imhaotian/status/1752621754273472927) , Announcement (https://llava-vl.github.io/blog/2024-01-30-llava-1-6/) , Demo (https://llava.hliu.cc/) )

* Voice & Audio

* Argmax releases WhisperKit – super optimized (and on-device) Whisper for iOS/Macs (X (https://twitter.com/reach_vb/status/1752434666659889575) , Blogpost (https://www.takeargmax.com/blog/whisperkit) , Github (https://github.com/argmaxinc/WhisperKit) )

* Tools

* Infinite Craft – Addictive concept-combining game using LLama 2 (neal.fun/infinite-craft/ (https://t.co/tb51ejeqsK) )

Haaaapy first of the second month of 2024 folks, how was your Jan? Not too bad I hope? We definitely got quite a show today: the live recording turned into a stream of breaking news, authors who came up, deeper interviews and of course… news.

This podcast episode focuses only on the news, but you should know that we had a deeper chat with Eugene (PicoCreator (https://twitter.com/picocreator) ) from RWKV, and a deeper dive into a dataset curation and segmentation tool called Lilac with founders Nikhil (https://twitter.com/nsthorat) & Daniel. We also got a breaking news segment, where a guest joined us to talk about the latest open source release from AI2 👏

Besides that, oof what a week! It started with the news that the new Bard API (apparently with Gemini Pro + internet access) is now the 2nd best LLM in the world (according to LMSYS at least). Then there was the whole thing with Miqu, which turned out to be, yes, a leak of an earlier version of a Mistral model, and they acknowledged it. And finally the main release, LLaVa 1.6, became the SOTA of open source vision models, which was very interesting!

Open Source LLMs

Meta releases CodeLLama 70B

Benches 67.8% on HumanEval (without fine-tuning) and is already available on HuggingChat, Perplexity and TogetherAI, quantized for MLX on Apple Silicon, and has several finetunes, including SQLCoder, which beats GPT-4 on SQL (https://x.com/rishdotblog/status/1752329471867371659?s=20)

It has a 16K context window and is one of the top open models for code.

Eagle-7B RWKV based model

I was honestly a bit disappointed by the multilingual performance compared to 1.8B Stable LM, but the folks on stage told me not to compare this in a traditional sense to a transformer model, but rather to look at the potential here. So we had Eugene from the RWKV team join us on stage and talk through the architecture, the fact that RWKV is the first AI model in the Linux Foundation and will always be open source, and that they are working on bigger models! That interview will be released soon.

Olmo from AI2 – new fully open source 7B model (announcement)

This announcem…

by Alex Volkov
